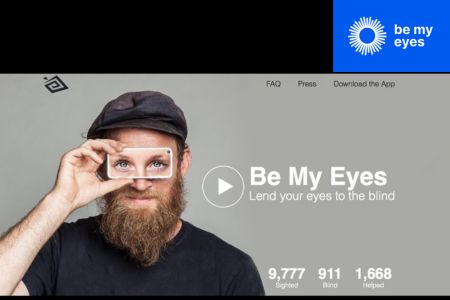

As a user of the Be My Eyes app for five years, and someone who has enjoyed its move to an AI version of this assistance app for vision-impaired people, I was delighted to welcome Hans Wiberg, the founder of Be My Eyes, and Jesper Henriksen, its CTO, to Inclusively.

What is Be My Eyes?

Be My Eyes (BME) is an iOS, Android and now Windows app that uses the device's camera to create a one-way video link and a two-way audio link with either a human volunteer or OpenAI's GPT-4, which can then describe what the camera sees to a vision-impaired person.

The History of BME

Hans got the idea from a friend who was using FaceTime to have another friend describe what his phone was pointed at. After securing funding and finding a home in one of Denmark's major vision impairment organisations, Hans tested the app with blind people and launched it in February 2015. It was an immediate success, signing up a thousand users and ten thousand volunteers on the first day. It now has 680,000 users and over 7.5 million volunteers around the world.

How it works

The blind person swipes until they hear 'Place a call' and places a call through the app. BME identifies where the person is and which language they speak, then finds a group of suitable volunteers, typically around 20, who are polled to find one able to answer. Push notifications tell those volunteers' phones that assistance is required, and a link is opened between the two parties. Matching the user with local volunteers minimises latency and supports a clearer interaction.
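The matching and polling steps can be illustrated with a small sketch. It is not BME's implementation: the `Volunteer` fields, the use of UTC offset as a proxy for "local", and the helper names are all hypothetical. It filters volunteers by language, ranks them by time-zone distance, keeps a pool of about 20, and then takes the first pooled volunteer to respond.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Volunteer:
    volunteer_id: str
    languages: set[str]
    # Hypothetical proxy for "local": the volunteer's UTC offset in hours.
    utc_offset_hours: int
    available: bool = True


def select_volunteers(volunteers: Iterable[Volunteer], user_language: str,
                      user_utc_offset: int, pool_size: int = 20) -> list[Volunteer]:
    """Return up to pool_size available volunteers who speak the user's
    language, nearest time zone first (hypothetical ranking rule)."""
    candidates = [v for v in volunteers
                  if v.available and user_language in v.languages]
    # Stable sort: volunteers at the same distance keep their original order.
    candidates.sort(key=lambda v: abs(v.utc_offset_hours - user_utc_offset))
    return candidates[:pool_size]


def first_responder(pool: list[Volunteer], responses: list[str]) -> Optional[Volunteer]:
    """Given volunteer IDs in the order they answered the push notification,
    return the first one who was actually in the polled pool."""
    by_id = {v.volunteer_id: v for v in pool}
    for volunteer_id in responses:
        if volunteer_id in by_id:
            return by_id[volunteer_id]
    return None
```

Ranking by distance keeps the call close to the user, which the article links to lower latency. The "first to answer wins" rule is an assumption about how polling resolves.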

Business model

There was no business plan as such, but it was decided very early on that the service should be free at the point of use for blind people. Eyesight should be free! And a large proportion of blind people live in the so-called Global South and cannot afford expensive devices, services and support.

One of the employees came up with the idea of a service directory. This is where companies like LinkedIn, Spotify, Google and Microsoft use the app to route blind customers to specialist helpdesk agents who assist with accessibility issues. Of course, they pay BME for this service, which allows the charity to keep its promise that the service remains free for blind people.

So, you can call a volunteer for your personal matters or you can link with a professional from a company for accessibility help with their products and services.

One of the smallest companies in the world, BME, has one of the biggest, Microsoft, as its customer!

Enter AI

In February 2023 OpenAI phoned BME to ask whether they would be interested in seeing how its LLM could help the blind community. Hans was a little sceptical about how well the LLM could recognise images and objects, as there have been many offerings claiming to do this. In practice, OpenAI's model blew them away with the detail and accuracy of its descriptions.

After launch, OpenAI was also happy to partner with BME to keep the service free to the blind community at the point of use. Today BME processes around 2 million pictures a month through GPT-4.

“Suddenly the blind community was at the forefront of the use of AI at such an exciting time.”

Technically, the smartphone camera is still the eye on the world. BME simply added the AI feature to the same app. The app opens a session with GPT-4, sends it the image to analyse, and returns a description to the blind person. The description appears in a chat window, so the screen reader can easily turn it into audio. The app isn't a viewfinder, so the blind person can point the device roughly in the direction of what needs describing.

BME tells the GPT-4 model that the person involved is blind or partially sighted, so the model produces a more detailed description. If the description isn't enough, the person can ask follow-up questions by voice or text in a simple chat window, depending on their preferences.
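To make the session idea concrete, here is a sketch of how such a conversation could be represented as a request payload, modelled on the publicly documented OpenAI Chat Completions message format (a `system` message, then a `user` message with text and `image_url` parts). It only builds the payload and makes no network call. The system-prompt wording, the helper names and the model name passed in are hypothetical; BME's actual prompt and integration are not described here.

```python
import base64
import json

# Hypothetical wording: tells the model who it is describing for.
SYSTEM_PROMPT = (
    "The user is blind or has low vision. Describe the image in detail, "
    "including any text, layout, colours and people, "
    "without identifying anyone by name."
)


def image_message(image_bytes: bytes, question: str) -> dict:
    """A user turn carrying a question plus the camera image as a data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url",
             "image_url": {"url": f"data:image/jpeg;base64,{encoded}"}},
        ],
    }


def new_session(image_bytes: bytes, model: str) -> dict:
    """Start a conversation: system context first, then the image."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            image_message(image_bytes, "Describe this image."),
        ],
    }


def add_follow_up(session: dict, assistant_reply: str, question: str) -> dict:
    """Append the model's last answer and the user's follow-up question,
    so the next request carries the whole conversation."""
    session["messages"].append({"role": "assistant", "content": assistant_reply})
    session["messages"].append({"role": "user", "content": question})
    return session
```

Keeping the full message history in the payload is what lets a follow-up such as "What colour is the mug?" refer back to the original image without re-sending context by hand.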

By the end of the beta, BME had 19,000 users on the system giving feedback, which is why the app is so good at describing features and faces.

Many LLMs have restrictions, due to regulatory and legal concerns, on what can be described and in how much detail. Because of the benefits this AI feature can bring to low-vision and blind users, OpenAI designed mitigations and processes that allow the BME product to describe features of faces and people without identifying anyone by name, providing a more equitable experience for these users.

How about video?

Analysing video is the next natural step. According to Jesper, it is really just a number of images being processed. What is unclear is the cost of analysing the many pictures needed to describe a video, and what connection quality would be required to support it. In this scenario, the blind person will call the AI and have a conversation with it. You can see BME's proof of concept of this next stage here.
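If video is treated as "just a number of images", the open questions of cost and connectivity become simple arithmetic. The sketch below assumes a fixed frame-sampling rate, a placeholder per-image cost and a placeholder frame size; none of these figures come from BME or OpenAI.

```python
import math


def frames_to_analyse(duration_s: float, frames_per_second: float) -> int:
    """Number of still frames sent for description if the video is sampled
    at a fixed rate (a hypothetical sampling strategy)."""
    return math.ceil(duration_s * frames_per_second)


def estimated_cost(duration_s: float, frames_per_second: float,
                   cost_per_image: float) -> float:
    """Treating video as a series of images, cost scales linearly with the
    number of frames. cost_per_image is a placeholder, not a real price."""
    return frames_to_analyse(duration_s, frames_per_second) * cost_per_image


def required_uplink_kbps(frames_per_second: float, kilobytes_per_frame: float) -> float:
    """Rough upload bandwidth needed to send the sampled frames
    (frames/s x KB/frame x 8 kbit/KB = kbit/s)."""
    return frames_per_second * kilobytes_per_frame * 8
```

For example, a one-minute clip sampled at one frame per second means 60 images, and at 100 KB per frame the phone needs roughly 800 kbps of sustained upload. Sampling at two frames per second doubles both the image count and the bandwidth, which is why sampling rate is the main lever on cost and connectivity.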

Latency

Jesper points out that getting the response time under 5 seconds brings us close to a real-time conversation with AI. That is the target, but the models most often respond in around 9 seconds, which means some waiting for blind users. These interactions are multimodal: you can talk to the model by voice or chat while the app is analysing your video.

The future

AI is only going to get faster, stronger and better, and supposedly cheaper! As well as analysing video and going multimodal for interactions, we are on the cusp of models being deployed partially on devices such as smartphones, and certainly at the edge of the network, rather than being handled only in the host data centre.

According to Hans, it will soon be possible to provide a sustainable service for all blind people, delivered through the mobile phone and possibly through smart glasses and other wearables.

Smart glasses are certainly an extension of the service. Many blind people already have their hands full with a white cane or guide dog, so not having to hold a phone up in front of them would be an advantage. It also eases some blind people's concerns about security when holding an expensive phone out in front of them for this sort of service.

Network connectivity and power consumption are the main barriers currently facing smart glasses, but both are improving with every iteration. Wearables make a lot of sense.

Working with the telcos

Using BME as a customer experience tool is also a possibility. A telco can use the camera feature to help blind customers install routers and set up TVs, as well as troubleshoot problems. Sky Ireland is an example of this at work.

Going through BME helps set expectations for both the blind person and the agent. The Sky Ireland agent, for example, can see the status lights on the router or how the TV screen is set up, which can resolve the customer's problem quickly without the need for a truck roll.

BME supports deep linking, so it can be launched from the web or from inside another app, such as a telco super app, taking the call directly to the company profile. For example, a BME link in the support section of a company website could launch the app when a customer clicks on it.
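As an illustration of the deep-linking idea only, the sketch below builds a support link that carries a company profile ID and parses it back on the receiving side. The base URL, the `profile` and `source` parameters and the `example-telco` ID are all hypothetical placeholders; BME's real link format is not given in the article.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Placeholder base URL: NOT BME's real deep-link format.
HYPOTHETICAL_BASE_URL = "https://example.com/bme/connect"


def support_deep_link(company_profile_id: str, source: str = "web") -> str:
    """Build a link a company could place on its support page or inside its
    own app so the call goes straight to its profile (hypothetical params)."""
    query = urlencode({"profile": company_profile_id, "source": source})
    return f"{HYPOTHETICAL_BASE_URL}?{query}"


def profile_from_link(link: str) -> Optional[str]:
    """What the receiving app would do: read the profile ID back out,
    or return None if the link carries no profile."""
    values = parse_qs(urlparse(link).query).get("profile")
    return values[0] if values else None
```

Carrying the destination in the link itself is what lets a website button or a super app skip the directory and open the call with the right company.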

For corporate customers the offering includes the human agent option as well as the AI option. Companies like Microsoft are seeing resolution rates of up to 70% using the AI features on their BME helpdesk.

BME is breaking down accessibility and inclusion barriers that were designed around specific categories of disabled people, and it is also helping to address the many overlaps in the population when it comes to the kind of help people need. BME is designed for blind and low-vision people, but the team knows that people with colour blindness, dyslexia and other impairments also use it. Because the service is free and no proof of disability is required, that group could grow.