I have been using the Be My Eyes app on my iPhone for several years to dial up one of their 7 million volunteers, who can see through my phone camera and help me do things like set the aircon in a hotel, identify a bottle of wine, choose a shirt from my wardrobe or simply get some idea of my surroundings. The app has now added ChatGPT functionality in the form of image recognition, and I can honestly say it has blown me away, as it has everyone I have demonstrated it to since it came out in beta.

So now the app takes a photo and sends it off to ChatGPT to analyse the picture.

Here are a few examples of what it can do in less than 10 seconds:

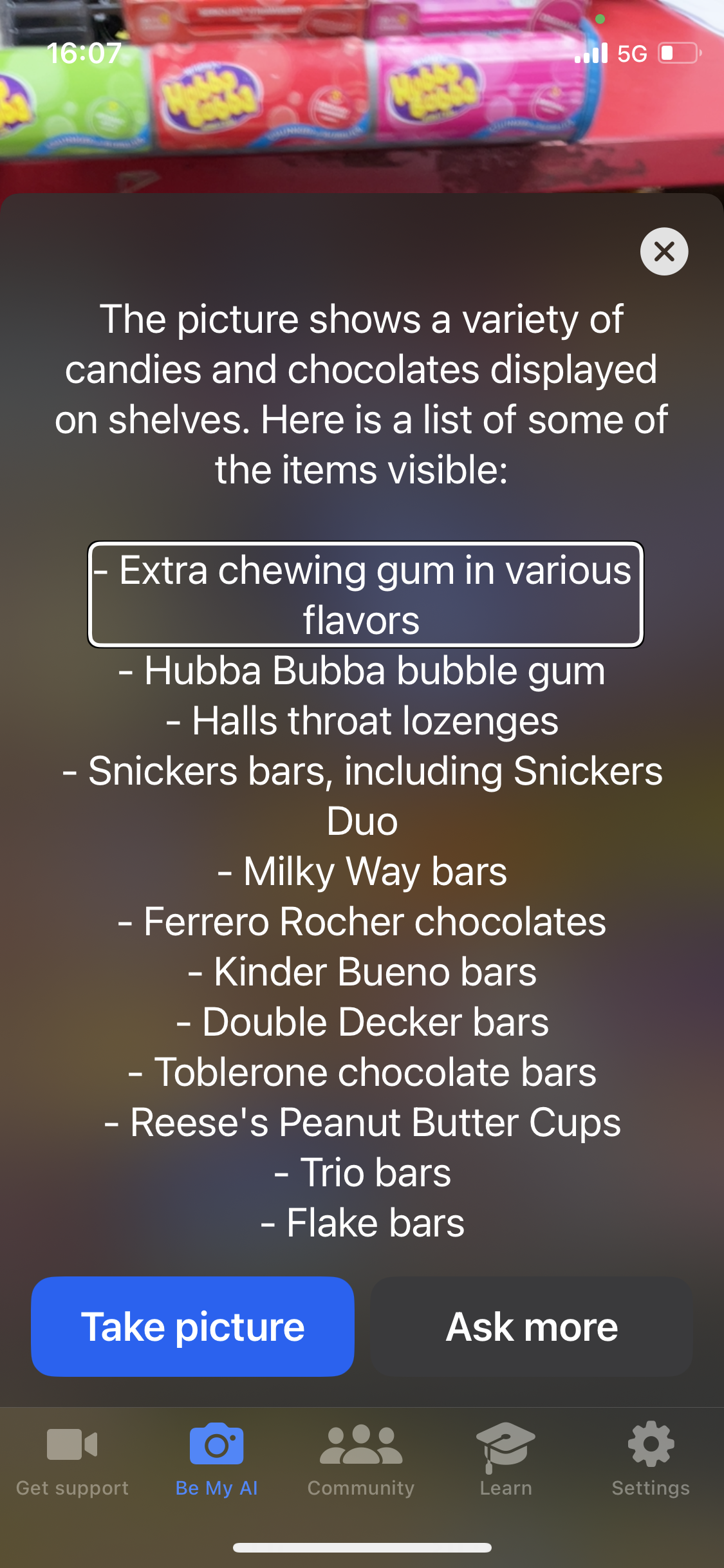

· I was looking for some chocolate at my local corner shop, so I took a picture of the confectionery display. The following shows you what happened.

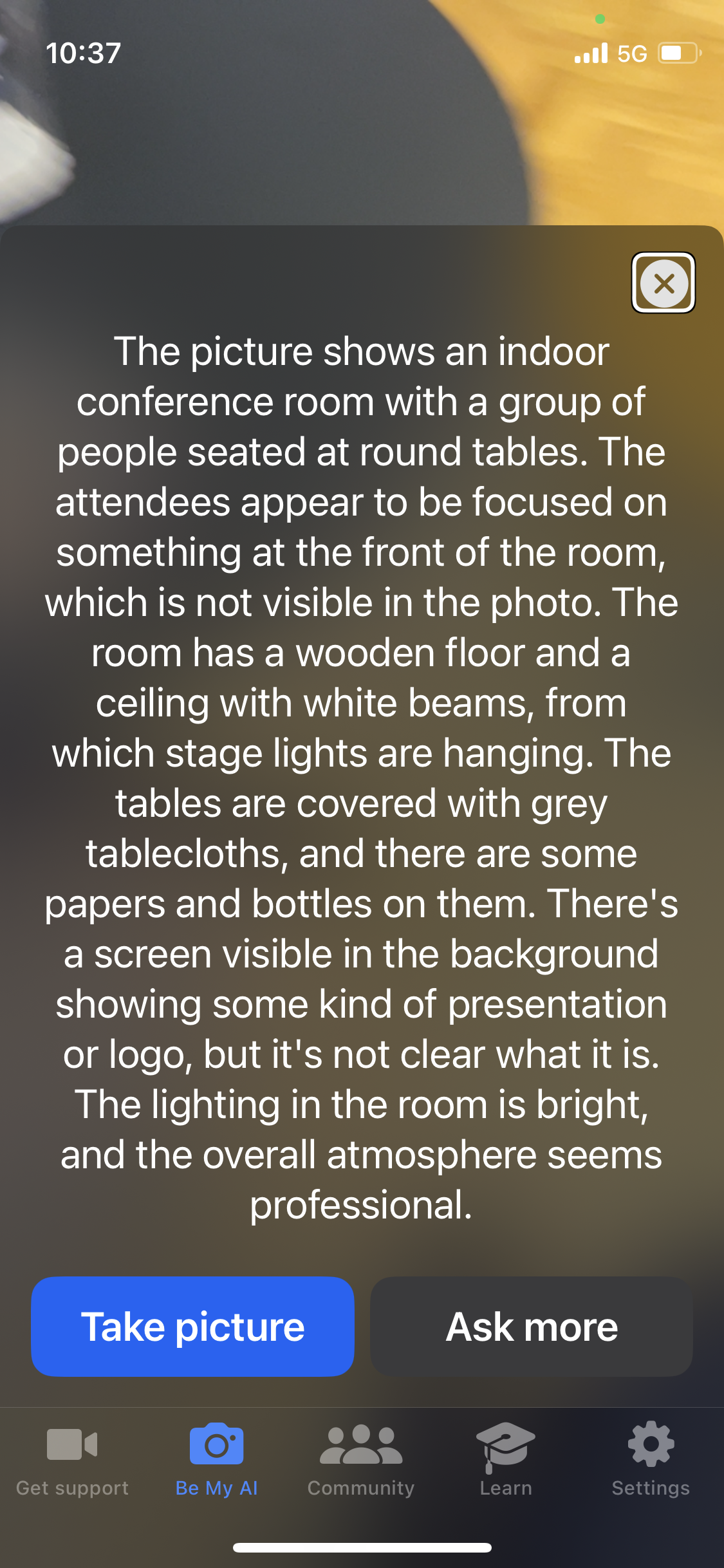

· At the recent Great Telco Debate in London, whilst sitting on stage, I took a photo of the audience. The app provided the following description:

At least it said the attendees were focused on what was happening at the front of the room!

After the initial description, the app offers the option to ask more specific questions via a chat function. This leverages the iPhone VoiceOver screen reader, which is my lifeline for interacting with all apps in my personal and business life.

I cannot overemphasise the potential impact of generative AI for a blind person. It paints an audio picture of people as well as of situations. It brings what can be a very isolating condition to life in terms of understanding surroundings.

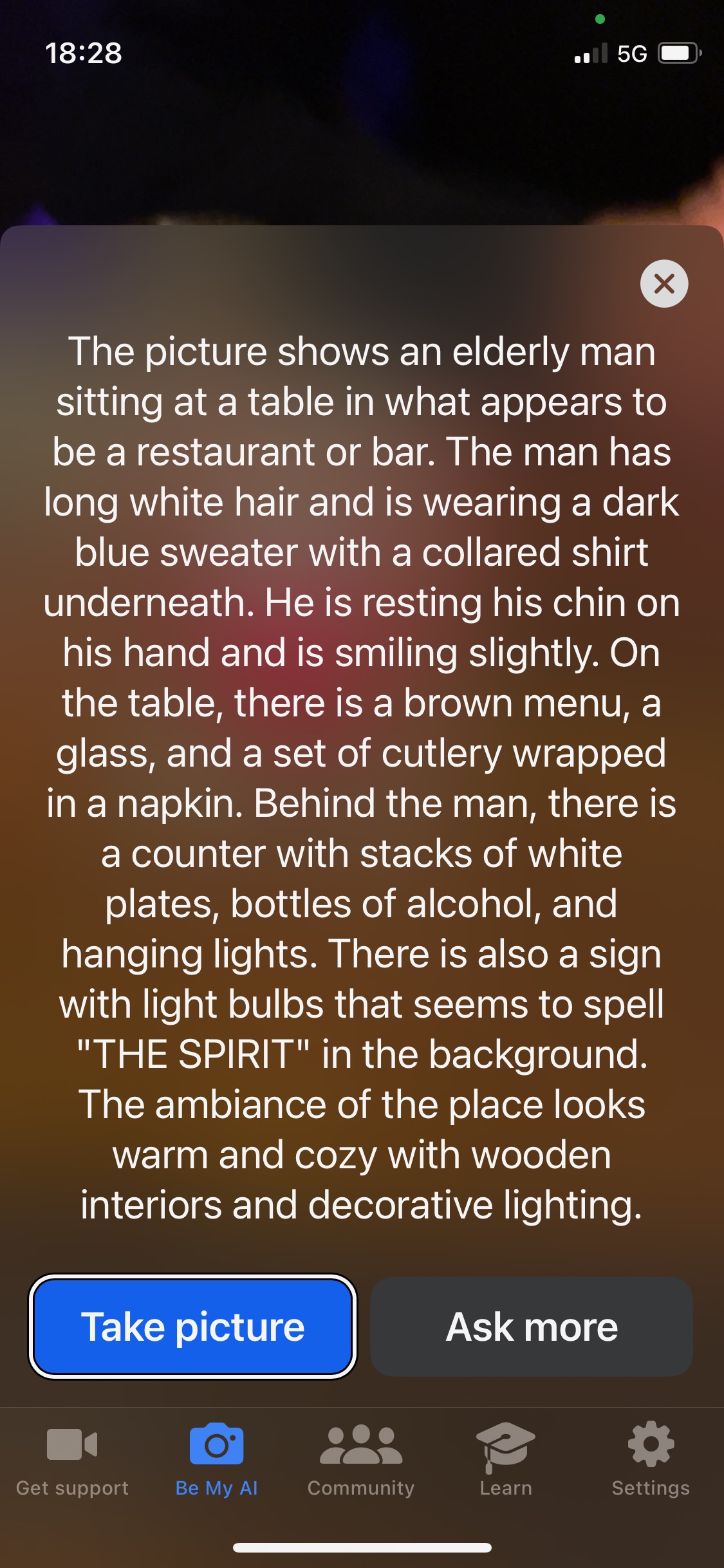

Mind you, I have learned to be careful about pointing it at people, as it can be very unflattering when describing their features and ages! Perhaps this is due to the AI developers being young and perceiving people of around 60 as ‘old or elderly’. Hopefully AI will learn not to be too subjective/judgemental if it wants to keep the humans on side!

The ‘elderly man’ in question will remain unnamed but he’s younger than me!